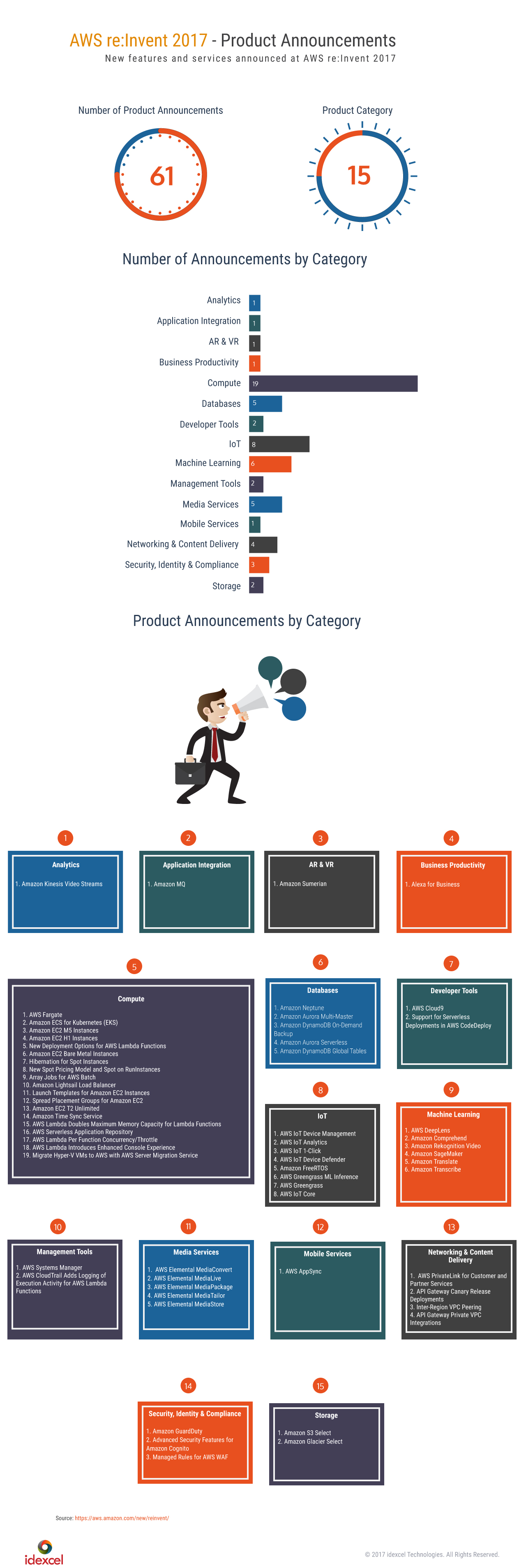

Amid the early turmoil of the IoT world, AWS has unveiled a set of IoT services that covers a wide range of uses, giving a still-unsettled field a more mature set of tools to build on.

AWS IoT Device Management

AWS IoT Device Management lets users securely onboard, organize, monitor, and remotely manage IoT devices at scale throughout their lifecycle. Its features cover configuring devices, organizing the device inventory, monitoring the fleet, and remotely managing devices deployed across many locations, including updating device software over the air (OTA). This reduces the cost and effort of managing a large IoT device infrastructure. It also lets customers provision devices in bulk and register device information such as metadata, identity, and policies.

A new search capability queries against both device attributes and device state, so devices can be found in near real time. Configurable device logging levels, which give more granular control, and remote updates of device software have also been added to improve device functionality.
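As a rough illustration of the search capability, the sketch below pages through the results of a fleet index query using the `search_index` operation of the boto3 `iot` client. The `find_devices` helper, the page size, and the query string in the usage note are illustrative assumptions, not details from the announcement, and fleet indexing must be enabled on the account before a real call returns results.

```python
def find_devices(iot_client, query, page_size=100):
    """Return the names of all things matching a fleet index query.

    `iot_client` is expected to behave like boto3.client("iot").
    """
    names = []
    kwargs = {"queryString": query, "maxResults": page_size}
    while True:
        response = iot_client.search_index(**kwargs)
        names.extend(thing["thingName"] for thing in response.get("things", []))
        token = response.get("nextToken")
        if not token:
            return names
        # Ask for the next page of results on the following iteration.
        kwargs["nextToken"] = token
```

With a real client this might be called as `find_devices(boto3.client("iot"), "attributes.model:sensor-v2 AND shadow.reported.firmware:1.0.3")`, where the attribute and shadow field names are hypothetical. Passing the client in as an argument keeps the helper easy to exercise with a stub.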

AWS IoT Analytics

AWS IoT Analytics cleanses, processes, stores, and analyzes IoT data at scale. AWS positions it as the easiest way to run analytics on IoT data and gain insights that support better decisions.

IoT Analytics includes data preparation capabilities for common IoT use cases such as predictive maintenance, asset usage patterns, and failure profiling. It captures data from devices connected to AWS IoT Core, then filters, transforms, and enriches that data before storing it in a time-series database for analysis.

The service can be set up to collect specific data for particular devices, apply mathematical transforms to process the data, and enrich the data with device-specific metadata such as device type and location before storing the processed data. Users can then run ad hoc queries with the built-in SQL query engine, or perform more complex processing and analytics such as statistical inference and time series analysis.
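To make the filter, transform, and enrich sequence concrete, here is a minimal plain-Python sketch. It does not call the IoT Analytics API; the message fields, the Celsius-to-Fahrenheit transform, and the `DEVICE_REGISTRY` lookup are all hypothetical stand-ins for what the service would be configured to do.

```python
# Hypothetical device metadata, standing in for a device registry lookup.
DEVICE_REGISTRY = {
    "pump-01": {"device_type": "pump", "location": "plant-a"},
    "pump-02": {"device_type": "pump", "location": "plant-b"},
}


def process(messages, wanted_devices):
    """Filter, transform, and enrich raw device messages."""
    processed = []
    for msg in messages:
        # Filter: keep only messages from the devices of interest.
        if msg["device_id"] not in wanted_devices:
            continue
        # Transform: apply a mathematical conversion (Celsius to Fahrenheit).
        record = {
            "device_id": msg["device_id"],
            "temp_f": msg["temp_c"] * 9 / 5 + 32,
        }
        # Enrich: attach device-specific metadata such as type and location.
        record.update(DEVICE_REGISTRY.get(msg["device_id"], {}))
        processed.append(record)
    return processed
```

The records returned by `process` are what would then be written to the data store and queried.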

AWS IoT Device Defender

AWS IoT Device Defender is a fully managed service that helps users secure a fleet of IoT devices on an ongoing basis. It audits the fleet to ensure it adheres to security best practices, detects abnormal device behavior, alerts users to security issues, and recommends mitigation actions for those issues. AWS IoT Device Defender is not yet generally available.

Amazon FreeRTOS

Amazon FreeRTOS is an IoT operating system for microcontrollers that makes small, low-powered devices easy to program, deploy, secure, connect, and maintain. It provides the FreeRTOS kernel, a popular open source real-time operating system for microcontrollers, along with software libraries for security and connectivity. Amazon FreeRTOS lets users program connected microcontroller-based devices, collect data from them for IoT applications, and scale those applications across millions of devices. Amazon FreeRTOS is free of charge, open source, and available to all.

AWS Greengrass

AWS Greengrass Machine Learning (ML) Inference lets users run ML inference locally on AWS Greengrass devices using trained machine learning models. Previously, building and training ML models and running ML inference were done almost exclusively in the cloud. Training ML models requires massive computing resources, so it remains a natural fit for the cloud. With AWS Greengrass ML Inference, AWS Greengrass devices can make smart decisions quickly as data is being generated, even when they are disconnected.

The product aims to simplify each step of ML deployment. For example, the user can access a deep learning model built and trained in Amazon SageMaker directly from the AWS Greengrass console and then download it to the target device. AWS Greengrass ML Inference includes a prebuilt Apache MXNet framework to install on AWS Greengrass devices.

It also includes prebuilt AWS Lambda blueprints that can be used to create an inference app. The Lambda blueprint shows common tasks such as loading models, importing Apache MXNet, and taking actions based on predictions.

AWS IoT Core

AWS IoT Core now provides enhanced authentication mechanisms. With the custom authentication feature, users can apply bearer token authentication strategies, such as OAuth, to connect to AWS without an X.509 certificate on their devices. This lets them reuse the authentication mechanisms they have already invested in.
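Custom authentication of this kind is typically backed by an AWS Lambda function that validates the token and returns a policy. The handler below is a minimal sketch: the response field names are assumed to follow the shape documented for AWS IoT custom authorizers and should be checked against the current documentation, while the token table, the principal name, and the policy contents are hypothetical. A real authorizer would validate an OAuth token with its issuer rather than look it up in a dictionary.

```python
# Hypothetical token table; a real authorizer would validate the bearer
# token with its identity provider instead.
KNOWN_TOKENS = {"demo-token-123": "device42"}


def lambda_handler(event, context):
    """Authorize a device connection based on a bearer token."""
    principal = KNOWN_TOKENS.get(event.get("token"))
    if principal is None:
        # Unknown or missing token: reject the connection.
        return {"isAuthenticated": False, "policyDocuments": []}
    policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["iot:Connect", "iot:Publish"],
            "Resource": "*",  # Placeholder; scope this down in practice.
        }],
    }
    return {
        "isAuthenticated": True,
        "principalId": principal,
        "policyDocuments": [policy],
        "disconnectAfterInSeconds": 3600,
        "refreshAfterInSeconds": 300,
    }
```

The returned policy plays the role that a certificate-attached policy would otherwise play, which is why the device itself needs no X.509 certificate in this flow.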

AWS IoT Core also makes it easier for devices to access other AWS services, for example to upload an image to S3. This feature removes the need for customers to store multiple credentials on their devices.
